Flink job fails to run

|

A question: after submitting a job with flink-1.11.1 in YARN per-job mode, the following exception is thrown:

java.lang.NoClassDefFoundError: org/apache/hadoop/mapred/JobConf
    at java.lang.Class.getDeclaredMethods0(Native Method)
    at java.lang.Class.privateGetDeclaredMethods(Class.java:2701)
    at java.lang.Class.getDeclaredMethod(Class.java:2128)
    at java.io.ObjectStreamClass.getPrivateMethod(ObjectStreamClass.java:1629)
    at java.io.ObjectStreamClass.access$1700(ObjectStreamClass.java:79)
    at java.io.ObjectStreamClass$3.run(ObjectStreamClass.java:520)
    at java.io.ObjectStreamClass$3.run(ObjectStreamClass.java:494)
    at java.security.AccessController.doPrivileged(Native Method)
    at java.io.ObjectStreamClass.<init>(ObjectStreamClass.java:494)
    at java.io.ObjectStreamClass.lookup(ObjectStreamClass.java:391)
    at java.io.ObjectStreamClass.initNonProxy(ObjectStreamClass.java:681)
    at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1885)
    at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1751)
    at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1885)
    at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1751)
    at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1885)
    at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1751)
    at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1885)
    at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1751)
    at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1885)
    at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1751)
    at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1885)
    at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1751)
    at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1885)
    at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1751)
    at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1885)
    at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1751)
    at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1885)
    at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1751)
    at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1885)
    at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1751)
    at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1885)
    at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1751)
    at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1885)
    at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1751)
    at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1885)
    at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1751)
    at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1885)
    at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1751)
    at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1885)
    at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1751)
    at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1885)
    at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1751)
    at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1885)
    at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1751)
    at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1885)
    at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1751)
    at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1885)
    at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1751)
    at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1885)
    at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1751)
    at java.io.ObjectInputStream.readNonProxyDesc(ObjectInputStream.java:1885)
    at java.io.ObjectInputStream.readClassDesc(ObjectInputStream.java:1751)
    at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2042)
    at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1573)
    at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2287)
    at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2211)
    at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2069)
    at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1573)
    at java.io.ObjectInputStream.readArray(ObjectInputStream.java:1975)
    at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1567)
    at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2287)
    at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2211)
    at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2069)
    at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1573)
    at java.io.ObjectInputStream.defaultReadFields(ObjectInputStream.java:2287)
    at java.io.ObjectInputStream.readSerialData(ObjectInputStream.java:2211)
    at java.io.ObjectInputStream.readOrdinaryObject(ObjectInputStream.java:2069)
    at java.io.ObjectInputStream.readObject0(ObjectInputStream.java:1573)
    at java.io.ObjectInputStream.readObject(ObjectInputStream.java:431)
    at org.apache.flink.util.InstantiationUtil.deserializeObject(InstantiationUtil.java:576)
    at org.apache.flink.util.InstantiationUtil.deserializeObject(InstantiationUtil.java:562)
    at org.apache.flink.util.InstantiationUtil.deserializeObject(InstantiationUtil.java:550)
    at org.apache.flink.util.InstantiationUtil.readObjectFromConfig(InstantiationUtil.java:511)
    at org.apache.flink.streaming.api.graph.StreamConfig.getStreamOperatorFactory(StreamConfig.java:276)
    at org.apache.flink.streaming.runtime.tasks.OperatorChain.createChainedOperator(OperatorChain.java:471)
    at org.apache.flink.streaming.runtime.tasks.OperatorChain.createOutputCollector(OperatorChain.java:393)
    at org.apache.flink.streaming.runtime.tasks.OperatorChain.createChainedOperator(OperatorChain.java:459)
    at org.apache.flink.streaming.runtime.tasks.OperatorChain.createOutputCollector(OperatorChain.java:393)
    at org.apache.flink.streaming.runtime.tasks.OperatorChain.createChainedOperator(OperatorChain.java:459)
    at org.apache.flink.streaming.runtime.tasks.OperatorChain.createOutputCollector(OperatorChain.java:393)
    at org.apache.flink.streaming.runtime.tasks.OperatorChain.<init>(OperatorChain.java:155)
    at org.apache.flink.streaming.runtime.tasks.StreamTask.beforeInvoke(StreamTask.java:453)
    at org.apache.flink.streaming.runtime.tasks.StreamTask.invoke(StreamTask.java:522)
    at org.apache.flink.runtime.taskmanager.Task.doRun(Task.java:721)
    at org.apache.flink.runtime.taskmanager.Task.run(Task.java:546)
    at java.lang.Thread.run(Thread.java:748)
Caused by: java.lang.ClassNotFoundException: org.apache.hadoop.mapred.JobConf
    at java.net.URLClassLoader.findClass(URLClassLoader.java:382)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:418)
    at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:355)
    at java.lang.ClassLoader.loadClass(ClassLoader.java:351)
    ... 87 more

HADOOP_CLASSPATH is configured on every YARN node. Judging from the error, a Hadoop-related jar is missing: once I add flink-shaded-hadoop-2-2.6.5-10.0 to flink/lib, the job submits successfully. However, since version 1.11 Flink officially no longer recommends the flink-shaded-hadoop-2-2.6.5-10.0 approach and recommends HADOOP_CLASSPATH instead, yet following the recommended approach throws this exception. I am not sure whether this is a bug in flink-1.11. Any guidance would be appreciated, thanks! |

|

Try running the following command on every node of the cluster:

export HADOOP_CLASSPATH=****************

Then submit the job. |

|

I followed the official Flink documentation and ran export HADOOP_CLASSPATH=`hadoop classpath` on every node of the Hadoop cluster, but I still get: java.lang.NoClassDefFoundError: org/apache/hadoop/mapred/JobConf

I am not sure whether this is a Flink bug. According to the error, the missing jar is hadoop-mapreduce-client-core.jar, but that jar lives under /usr/local/hadoop-2.10.0/share/hadoop/mapreduce/*, and that directory is included in HADOOP_CLASSPATH, so in principle it should be loaded. |
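One way to double-check the premise of the post above, namely that the MapReduce directory really is on HADOOP_CLASSPATH, is to scan the variable entry by entry. The following is only a minimal sketch: classpath_has is a hypothetical helper name, and the substring share/hadoop/mapreduce is assumed from the path quoted in the post. It inspects only the environment of the shell it runs in, which is not necessarily what the YARN containers end up with.

```shell
# Hypothetical helper: succeed if any colon-separated entry of the classpath
# string in $1 contains the substring in $2. Entries are split with parameter
# expansion so that wildcard entries such as .../mapreduce/* are never
# glob-expanded. (rest and entry are plain globals, kept simple for the sketch.)
classpath_has() {
  rest="$1:"
  while [ -n "$rest" ]; do
    entry="${rest%%:*}"   # text before the first colon
    rest="${rest#*:}"     # drop that entry and its colon
    case "$entry" in
      *"$2"*) return 0 ;;
    esac
  done
  return 1
}

# Check the current shell's HADOOP_CLASSPATH (treated as empty if unset).
if classpath_has "${HADOOP_CLASSPATH:-}" "share/hadoop/mapreduce"; then
  echo "HADOOP_CLASSPATH contains a MapReduce entry"
else
  echo "HADOOP_CLASSPATH has no MapReduce entry"
fi
```

A positive result here only confirms the client-side variable; the classpath of the TaskManager process still has to be checked separately.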

|

Take a look at the TM (TaskManager) log; the CLASSPATH is printed in there.

--
Best Regards
Jeff Zhang |

|

The TM's CLASSPATH indeed does not contain hadoop-mapreduce-client-core.jar. Could this be a problem with the Hadoop cluster? Or is the shaded Hadoop jar required after all? Officially, the shaded Hadoop jar is no longer recommended. |
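The log check suggested in this exchange can be sketched roughly as follows. This assumes the TaskManager log contains a startup line with the text "Classpath:" followed by the colon-separated JVM classpath, and that the log has been saved to a local file (for example from the output of yarn logs -applicationId with the job's application ID). The function name tm_classpath_entries and the file name are hypothetical.

```shell
# Hypothetical helper: print the classpath entries from a TaskManager log ($1)
# that contain the keyword in $2. The exit status is non-zero when no entry
# matches, because the final grep finds nothing.
tm_classpath_entries() {
  # Take the first line mentioning "Classpath:", strip everything up to and
  # including that label, then split the remainder on ':' into one entry per line.
  sed -n '/Classpath:/{s/.*Classpath: *//p;q;}' "$1" | tr ':' '\n' | grep -- "$2"
}

# Example use (taskmanager.log is a placeholder path):
#   tm_classpath_entries taskmanager.log mapreduce \
#     || echo "no MapReduce entry on the TM classpath"
```

An empty result for the keyword mapreduce would match what is reported above: the MapReduce jars never reached the container classpath, regardless of what HADOOP_CLASSPATH says on the client.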

|

Are you on Hadoop 2? As far as I remember, this only happens with Hadoop 3.

--
Best Regards
Jeff Zhang |

|

I am on hadoop-2.10, with flink-sql-connector-hive-1.2.2_2.11-1.11.1.jar. |

|

I looked at the code and found that in Flink on YARN mode, the TM/JM do not put the MapReduce-related jars on the YARN container CLASSPATH. I created a JIRA issue to track this problem:

https://issues.apache.org/jira/browse/FLINK-23449 |

|

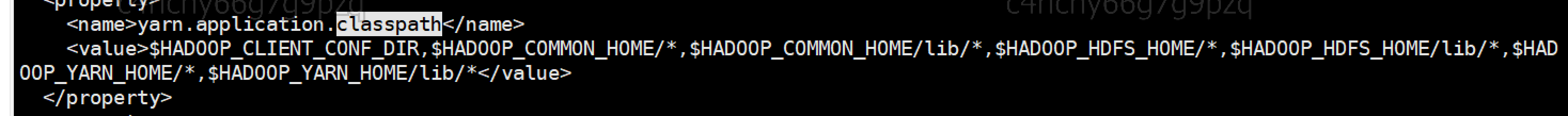

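This problem occurs because the application classpath set in the YARN configuration (yarn.application.classpath) does not include the Hadoop MapReduce jars.

It can be resolved by changing the YARN configuration at the place shown in the screenshot, or through a PR along the lines chenkai proposed.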

|
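As a rough way to see whether a cluster is affected, one can check whether its yarn-site.xml mentions the MapReduce directories at all. The sketch below makes several assumptions: the config file is taken to live under HADOOP_CONF_DIR (falling back to /etc/hadoop/conf), the MapReduce jars are taken to sit under share/hadoop/mapreduce as in a standard Hadoop layout, and yarn_site_mentions_mapreduce is a hypothetical helper name. It is a plain text match, not an XML parse, so it cannot tell which property the path appears in.

```shell
# Hypothetical helper: succeed if the given yarn-site.xml mentions a MapReduce
# directory anywhere in the file (coarse text match only).
yarn_site_mentions_mapreduce() {
  grep -q 'share/hadoop/mapreduce' "$1"
}

# Assumed location of the config file; adjust to the actual cluster layout.
site="${HADOOP_CONF_DIR:-/etc/hadoop/conf}/yarn-site.xml"
if [ -f "$site" ] && yarn_site_mentions_mapreduce "$site"; then
  echo "yarn-site.xml references the MapReduce jars"
else
  echo "no MapReduce entry found in $site"
fi
```

If nothing is found, the fix described above would presumably amount to appending entries such as $HADOOP_MAPRED_HOME/share/hadoop/mapreduce/* and $HADOOP_MAPRED_HOME/share/hadoop/mapreduce/lib/* to yarn.application.classpath; the exact values depend on the installation.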
